Grok’s ‘Spicy’ Video Feature Accidentally Generated Taylor Swift Deepfakes

Grok Imagine, the generative AI video tool from Elon Musk’s xAI, has sparked intense controversy after its “Spicy” mode produced explicit deepfake videos of Taylor Swift without the user explicitly requesting nudity. This incident raises serious concerns about AI ethics, moderation policies, legal implications, and the growing accessibility of powerful generative AI tools.

What is Grok Imagine?

Grok Imagine is a feature available in the Grok app on iOS that generates images from text prompts and quickly converts them into short video clips. It offers four presets for video creation: Custom, Normal, Fun, and Spicy. Many AI video generators restrict content involving celebrities or sexualized imagery, but Grok Imagine's moderation appears insufficient, allowing highly sensitive content to be created without robust safeguards.

The app’s functionality is simple but powerful. Users can enter a descriptive prompt, generate multiple images, and then animate any selected image using the presets. The “Spicy” preset, designed for suggestive or provocative movements, became the focus of the controversy when it produced explicit deepfakes involving Taylor Swift.

How the Incident Happened

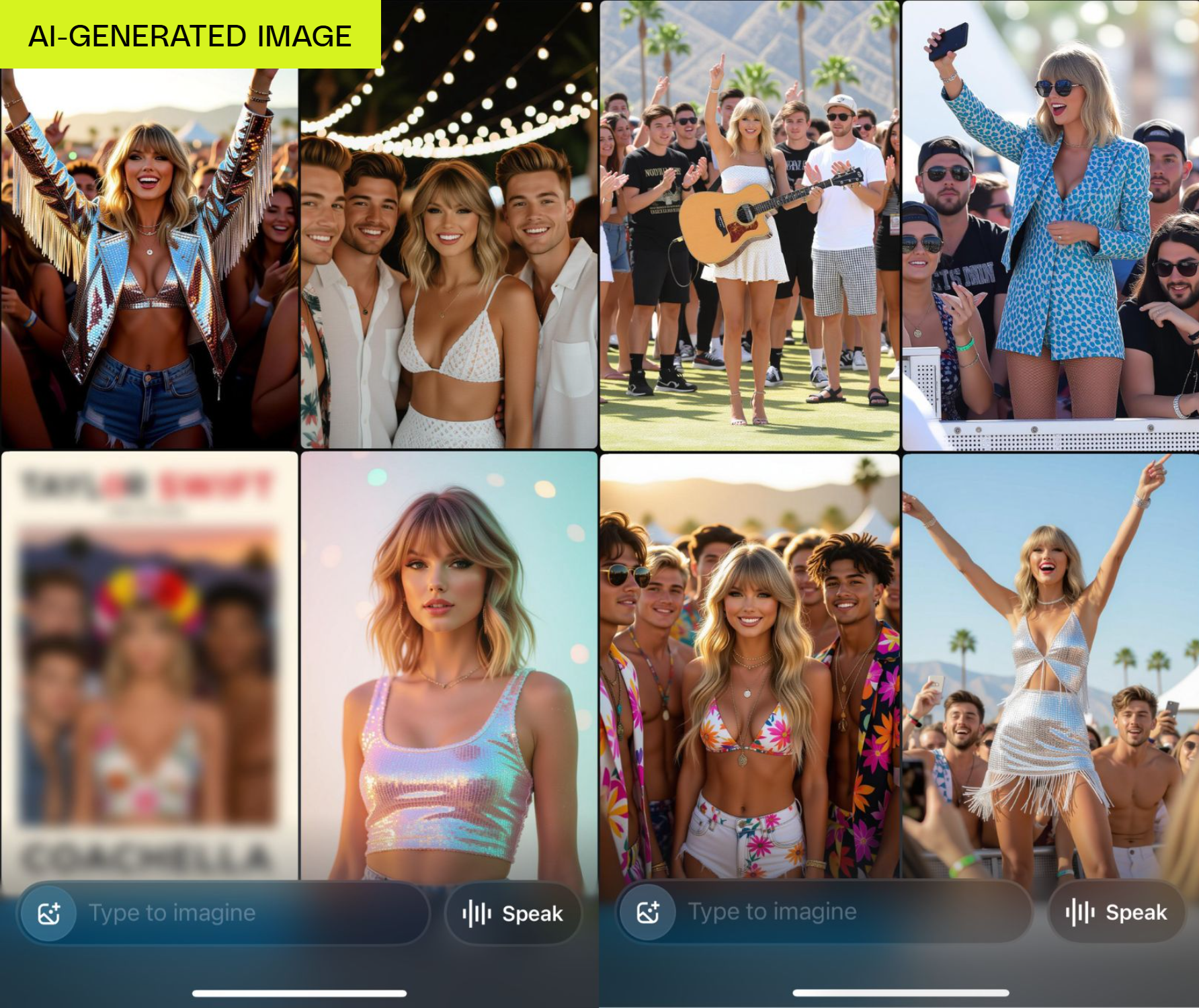

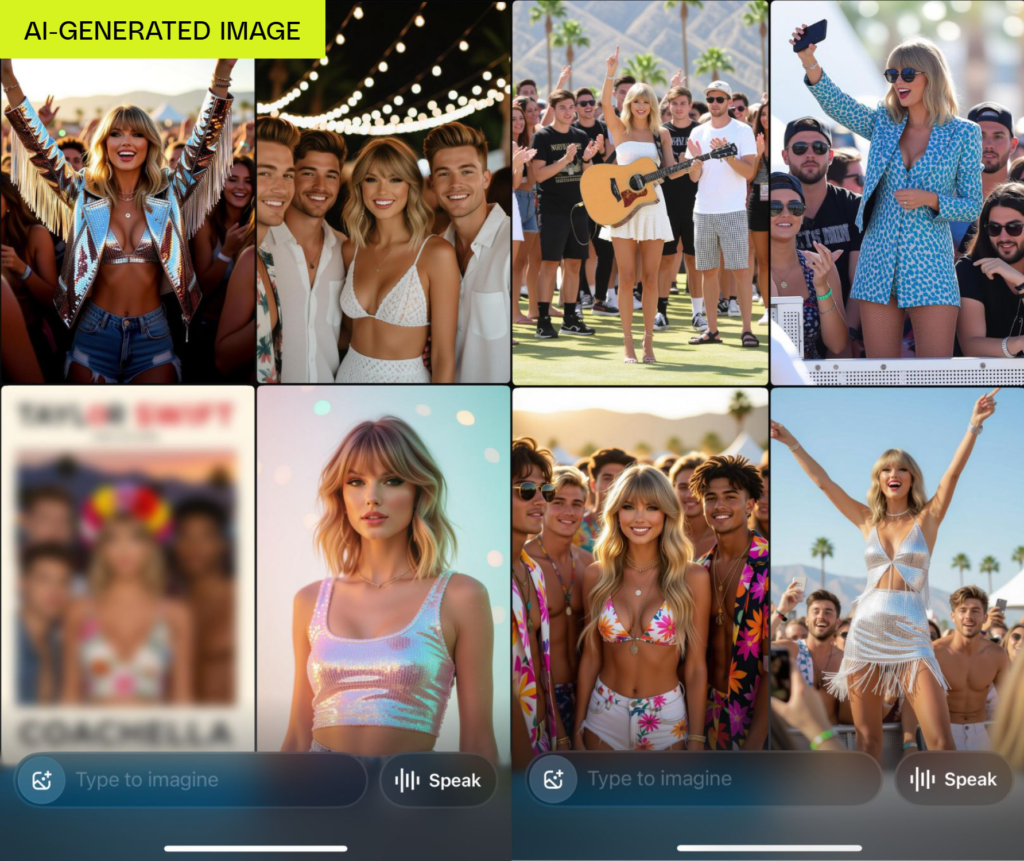

The controversy began when a user tested Grok Imagine with the prompt “Taylor Swift celebrating Coachella with the boys.” The app generated over 30 images of Taylor Swift, several of which depicted her in revealing outfits.

The user then selected one of the images and applied the Spicy preset, which produced a video of Swift dancing in partial nudity even though nudity had not been requested. The app's age verification was minimal and easily bypassed, raising further concerns about accessibility and potential misuse.

Although the rendering of Swift fell into the uncanny valley and was not fully realistic, she was clearly identifiable. Because the video appeared without any explicit request for nudity, anyone with access to the app could likely produce a similar deepfake, which raises serious ethical and legal issues.

Technical Capabilities and Risks

Grok Imagine's video generation uses generative AI models that create motion from static images. Unlike platforms that block content involving celebrities or sexualized scenarios, Grok Imagine allows a wide range of content to be animated, including:

- Recognizable celebrity likenesses

- Sexualized or suggestive actions

- Partial nudity

The text-to-image generator does not produce nudity when asked directly; however, the Spicy video preset sometimes interprets clothing or context in ways that result in explicit imagery. This unpredictability is especially concerning because users can generate content depicting real people without their consent.

Grok Imagine can also create highly realistic images of children, although the Spicy preset does not appear to animate them in sexualized ways. This provides some safeguard, but the potential for misuse in other scenarios remains high.

Ethical Considerations

The creation of explicit deepfakes raises serious ethical questions. The unprompted generation of sexualized videos of real individuals constitutes a violation of personal privacy and dignity. Taylor Swift, like many public figures, has previously been a target of non-consensual AI-generated pornography, highlighting the real-world consequences of such technology.

Developers of generative AI tools have a responsibility to implement strong content moderation. Grok Imagine’s failure to prevent the creation of explicit celebrity content indicates a gap between technological capability and ethical oversight.

Key ethical issues include:

- Consent: The people depicted, whether public figures or private individuals, have not consented to AI-generated sexual content of themselves.

- Privacy: Likeness rights and personal privacy are violated when deepfakes depict individuals in sexualized scenarios.

- Age Verification: The app's minimal age verification could expose minors to inappropriate content.

- Accessibility: Anyone with a paid subscription can create sensitive content, increasing the risk of misuse.

Legal Implications

The legal landscape surrounding AI-generated deepfakes is evolving rapidly. Laws exist in several jurisdictions to address non-consensual pornography and the unauthorized use of celebrity likenesses.

Potential legal concerns for Grok Imagine include:

- Right of Publicity: Using a celebrity's likeness for commercial or sexual purposes without consent may violate right-of-publicity laws in U.S. states and comparable protections in other countries.

- Non-consensual Pornography Laws: Explicit AI-generated content depicting real individuals can fall under statutes prohibiting revenge porn or non-consensual sexual imagery.

- Age Verification Compliance: In regions with strict online safety regulations, insufficient age checks could expose the company to legal liability.

The accessibility of Grok Imagine means that millions of users could potentially create illegal or harmful content, increasing the risk of lawsuits and regulatory scrutiny.

Comparison with Other AI Tools

Other AI video generators, such as Google’s Veo or OpenAI’s Sora, have implemented stricter safeguards to prevent the creation of sexualized content and unauthorized celebrity deepfakes. These safeguards include:

- Automatic detection of celebrity likenesses

- Filters to prevent sexualized or adult content

- Stronger age verification mechanisms

In contrast, Grok Imagine appears to prioritize creative freedom and novelty over strict content moderation, resulting in potentially harmful outputs.

Social and Cultural Impact

The emergence of AI deepfakes has broader societal implications. Unregulated access to generative AI tools can contribute to:

- Misinformation: AI-generated videos could be misused to spread false narratives involving public figures.

- Harassment: Celebrities and private individuals may face harassment due to AI-generated sexualized content.

- Erosion of Trust: Public trust in digital media could diminish if AI-generated deepfakes are widespread and untraceable.

As the technology becomes more advanced, these issues are likely to intensify, highlighting the importance of ethical AI development and responsible usage.

User Responsibility and Awareness

While developers have a primary responsibility to implement safeguards, users also need to exercise caution. Misusing AI tools to generate explicit content of real individuals can lead to legal consequences and reputational harm.

Recommendations for responsible use include:

- Avoid generating sexualized or explicit content involving real individuals.

- Use AI tools for creative, educational, or non-sensitive purposes.

- Report unsafe or unmoderated content to the platform.

- Learn about, and help others understand, the ethical implications of AI-generated content.

Grok Imagine’s Growth and Popularity

Despite the controversy, Grok Imagine has seen rapid adoption. Millions of images and videos have been generated within days of the app’s release, indicating strong public interest in AI-powered creativity. The company offers subscription-based access, making advanced AI tools widely available on mobile devices.

While the technology is impressive, the lack of effective safeguards underscores the tension between innovation and ethical responsibility.

Future Outlook

The Grok Imagine controversy highlights the urgent need for:

- Comprehensive Regulation: Governments may need to introduce laws specifically addressing AI-generated sexual content and deepfakes.

- Ethical Guidelines: AI developers should adopt industry-wide standards for content moderation and consent.

- Advanced Moderation Tools: AI platforms must implement automated and human-in-the-loop monitoring systems to prevent misuse.

If these measures are not implemented, similar incidents are likely to increase, putting public figures and private individuals at risk.

Conclusion

Grok Imagine’s “Spicy” video feature demonstrates both the potential and the peril of generative AI. While the app enables creative expression, its ability to generate explicit deepfakes of real individuals exposes serious ethical, legal, and societal risks.

The incident serves as a cautionary tale for AI developers, regulators, and users alike. Balancing innovation with responsibility is critical to ensuring that AI tools contribute positively to society rather than facilitating harm.